Imagine this: you’re wearing an augmented reality (AR) headset in a museum, pointing at a sculpture, and suddenly your display overlays historical context, related videos, and interactive elements.

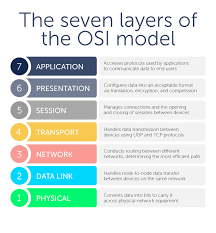

Behind that smooth experience lies a complex chain of communication. That chain traces down through the network architecture built on the OSI framework.

If any link breaks, the illusion falters—lag appears, data mismatches, or nothing appears at all.

In other words, for augmented reality to feel seamless, every layer in the OSI stack—from physical wires (or wireless signals) up to application software—must perform reliably.

Let’s walk through how AR solutions map onto each layer of the OSI model, then look at where AR networking is headed.

Layer 1: Physical — Foundations Under the AR Experience

At the very base of the stack is the physical layer. For AR, that means the hardware: cables or wireless spectrum, sensors, cameras, headsets, and smartphones.

Here, raw bits become real-world phenomena: light pulses, RF signals, electrical voltages. When this layer degrades (a poor signal, faulty wiring), the AR experience breaks. The headset might lose tracking, or visual overlays might drop out of view.

Even though you’re focused on the virtual elements, the physical layer is doing the heavy lifting behind the scenes.

Layer 2: Data Link — Making Local Connections Reliable

When your AR headset talks to a nearby hub or smartphone, the data link layer kicks in. This layer handles node-to-node communication, frames data, and detects (and in some cases corrects) local errors. Think of it as the handshake between hardware components.

In AR, that could mean the Wi-Fi or Bluetooth link between the device and a router, or between sensors and the processor.

If this layer fails or is poorly optimized, you’ll see increased latency, dropped frames, or choppy visuals because local interplay between sensors, device, and display is disrupted.
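
To make framing and local error detection concrete, here is a minimal Python sketch. The frame layout (a 2-byte length, the payload, then a CRC-32 trailer) and the names `build_frame` and `parse_frame` are assumptions for illustration only; real Wi-Fi and Bluetooth frames carry far more fields.

```python
import struct
import zlib
from typing import Optional


def build_frame(payload: bytes) -> bytes:
    """Wrap a payload in a toy frame: 2-byte length, payload, 4-byte CRC-32."""
    header = struct.pack("!H", len(payload))  # payload must fit in 16 bits
    checksum = zlib.crc32(header + payload)
    return header + payload + struct.pack("!I", checksum)


def parse_frame(frame: bytes) -> Optional[bytes]:
    """Return the payload if the frame is intact, or None if it is damaged."""
    if len(frame) < 6:  # too short to hold a header and a trailer
        return None
    (length,) = struct.unpack("!H", frame[:2])
    if len(frame) != 2 + length + 4:  # declared length disagrees with actual size
        return None
    (expected,) = struct.unpack("!I", frame[-4:])
    if zlib.crc32(frame[:-4]) != expected:  # bits were flipped in transit
        return None
    return frame[2:-4]
```

A receiver that gets `None` back would discard the frame, which is the kind of local loss that later shows up as dropped frames or choppy visuals.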

Layer 3: Network — Routing Virtual Content Through Paths

Next up: the network layer. Here, data packets are routed between devices, across the internet or local networks. Consider an AR app that pulls 3D models from a cloud server — the network layer ensures packets travel from that server to your headset.

If addresses can’t be resolved or routing is inefficient, you’ll experience buffering or incomplete overlays. AR is a high-bandwidth, low-latency beast, so this layer must be optimized to maintain seamless immersion.
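
As a rough illustration of what routing means in practice, the sketch below picks a next hop by longest-prefix match using Python's standard `ipaddress` module. The routing table, the next-hop labels, and the function name `next_hop` are all hypothetical; real routers do this in optimized hardware or kernel code.

```python
import ipaddress
from typing import Optional

# Hypothetical routing table: (destination prefix, next-hop label).
ROUTES = [
    (ipaddress.ip_network("0.0.0.0/0"), "internet-gateway"),
    (ipaddress.ip_network("10.0.0.0/8"), "campus-router"),
    (ipaddress.ip_network("10.20.0.0/16"), "edge-server-link"),
]


def next_hop(destination: str, routes=ROUTES) -> Optional[str]:
    """Choose the route whose prefix matches the destination most specifically."""
    address = ipaddress.ip_address(destination)
    matches = [(net, hop) for net, hop in routes if address in net]
    if not matches:
        return None  # no route: the packet cannot be forwarded
    _, best_hop = max(matches, key=lambda item: item[0].prefixlen)
    return best_hop
```

With this table, traffic for a nearby edge server takes the most specific route, while everything else falls back to the default gateway.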

Layer 4: Transport — Ensuring Delivery with AR Data Streams

The transport layer handles end-to-end delivery, including reliability and ordering. AR streams can include video, sensor data, positional tracking, and user inputs.

Some of that needs guaranteed delivery (e.g., positional data); other parts are time-sensitive (video frames) and may tolerate minimal loss.

The transport layer keeps things organized: it segments data, manages retransmission if needed, and provides flow control. If this layer misbehaves, you’ll see jitter, lag, or mismatched visuals.
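
The two delivery styles described above can be sketched on the receiving side. This is a simplified illustration, not how any particular protocol is implemented: the class names `InOrderReceiver` and `LatestWinsReceiver` are hypothetical, and retransmission and flow control are left out.

```python
from typing import Dict, List, Optional


class InOrderReceiver:
    """Buffers out-of-order segments and releases them in sequence order."""

    def __init__(self) -> None:
        self.next_seq = 0
        self.pending: Dict[int, bytes] = {}

    def receive(self, seq: int, data: bytes) -> List[bytes]:
        if seq < self.next_seq:
            return []  # duplicate of something already delivered
        self.pending[seq] = data
        ready = []
        # Release every segment that is now contiguous with what was delivered.
        while self.next_seq in self.pending:
            ready.append(self.pending.pop(self.next_seq))
            self.next_seq += 1
        return ready


class LatestWinsReceiver:
    """Keeps only segments newer than the last one accepted; late ones are dropped."""

    def __init__(self) -> None:
        self.last_seq = -1

    def receive(self, seq: int, data: bytes) -> Optional[bytes]:
        if seq <= self.last_seq:
            return None  # stale frame: showing it would move the picture backwards
        self.last_seq = seq
        return data
```

The first style suits data that must arrive complete and in order; the second suits streams such as video frames, where a late frame is worth less than the next fresh one.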

Layer 5: Session — Building Context for the AR Experience

Within the AR ecosystem, the session layer manages the dialogues: device to server, sensor to renderer, user session to cloud service. When you launch the AR app, that session is established, kept alive, and eventually torn down cleanly.

If session management fails, you might lose tracking, experience unexpected log-outs or reconnections, or find the device still “thinking” it’s connected when it’s not, resulting in virtual content that lags or doesn’t sync with the real-world context.
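
One common way to avoid a device “thinking” it is still connected is a heartbeat with a timeout. The sketch below is a minimal illustration under assumed names (`Session`, `timeout_s`, `heartbeat`, `is_alive`); it is not taken from any specific AR framework.

```python
import time
from typing import Callable


class Session:
    """Tracks whether a peer is still reachable, based on heartbeat timestamps."""

    def __init__(self, timeout_s: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.timeout_s = timeout_s
        self.clock = clock  # injectable so the logic can be tested without waiting
        self.last_heartbeat = clock()
        self.open = True

    def heartbeat(self) -> None:
        """Record that the peer was heard from; ignored once the session is closed."""
        if self.open:
            self.last_heartbeat = self.clock()

    def is_alive(self) -> bool:
        """Close the session if the peer has been silent for longer than the timeout."""
        if self.open and self.clock() - self.last_heartbeat > self.timeout_s:
            self.open = False
        return self.open

    def close(self) -> None:
        """Tear the session down explicitly."""
        self.open = False
```

An application could poll `is_alive()` each frame and pause or hide synced overlays as soon as it returns `False`, rather than rendering against stale state.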

Layer 6: Presentation — How the AR Data Looks and Is Formatted

Here we enter the realm of formatting, translation, and encryption. For AR, the presentation layer ensures data arrives in a form the device can render correctly: 3D model formats align, textures load, user data is encrypted, and vertical sync timing is kept.

If this layer is mishandled, AR overlays may appear distorted or miscolored, or critical data may fail to decrypt, causing misalignment between the virtual and real worlds or security and privacy issues.
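
To show what agreeing on a format looks like, here is a small Python sketch that encodes a device pose into a fixed binary layout and compresses a larger asset. The wire format (seven 32-bit floats in network byte order) and the function names are assumptions for illustration; encryption is omitted.

```python
import struct
import zlib
from typing import Tuple

# Assumed layout: position (x, y, z) followed by a rotation quaternion (w, x, y, z),
# each stored as a 32-bit float in network byte order.
POSE_FORMAT = "!7f"

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]


def encode_pose(position: Vec3, rotation: Quat) -> bytes:
    """Serialize a pose into 28 bytes that any peer using POSE_FORMAT can read."""
    return struct.pack(POSE_FORMAT, *position, *rotation)


def decode_pose(data: bytes) -> Tuple[Vec3, Quat]:
    """Reverse encode_pose; raises struct.error if the size is wrong."""
    values = struct.unpack(POSE_FORMAT, data)
    return values[:3], values[3:]


def compress_asset(raw: bytes) -> bytes:
    """Shrink an asset (for example mesh or texture bytes) before sending it."""
    return zlib.compress(raw)


def decompress_asset(blob: bytes) -> bytes:
    """Restore the original asset bytes on the receiving side."""
    return zlib.decompress(blob)
```

If sender and receiver disagree on `POSE_FORMAT` (byte order, field order, or float width), the decoded pose is wrong even though every byte arrived, which is one way overlays end up distorted or misaligned.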

Layer 7: Application — The User-Facing AR Magic

Finally, the application layer. This is where the end user interacts: the AR app itself, the user interface, voice commands, gestures, overlay logic. It ties into AR engines, cloud services, and user accounts.

It’s what users see and do. But this layer only works well if the six layers below are solid. If application data can’t travel properly, if sensors lag, or network drops occur, the AR assumption (that virtual overlays align with the real world seamlessly) collapses.

Looking Ahead: AR Scale and the Future Layers

As Augmented Reality grows—edge computing, 5G rollouts, massive multi-user AR sessions—each of the OSI model layers becomes more important.

The physical layer might include mmWave radios, the data link layer could run over mesh networks, the network layer might rely on edge routing, the transport layer may use ultra-low-latency protocols, and the presentation layer might lean heavily on AI-driven compression.

Understanding how every piece fits matters more than ever.

Final Thoughts

What seems like smooth magic on your AR device is the result of countless components working together.

The OSI model layers give us a blueprint for that cooperation—from sensors and signals, to frames and protocols, to overlays and experience. And when you apply that blueprint consciously to Augmented Reality, the result isn’t just flash—it’s reliable, immersive reality.